thutson3876

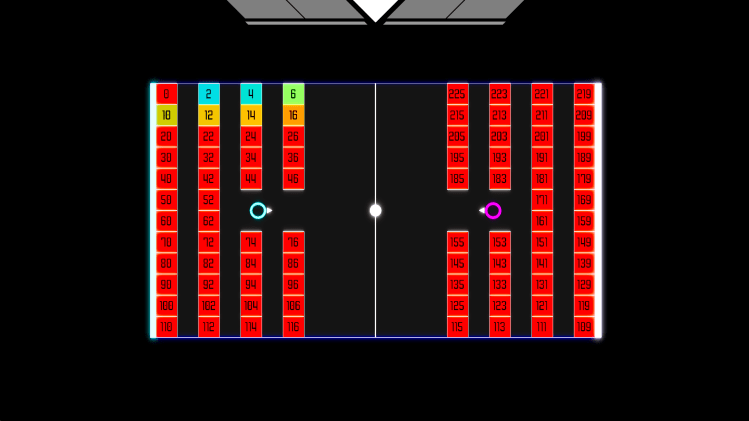

PolyPuck is a game prototype made in Godot in which a player battles an advanced AI, breaking blocks until they can score on the opponent’s side.

The core of this project is the AI the player faces. It uses Goal-Oriented Action Planning (GOAP) to adapt to the player and the board state.

Additionally, one bot simply isn’t enough. A branch of PolyPuck uses UDP sockets and a custom Python SDK so that developers can set up their own machine learning bot, train it in-game, and play it against players or other bots.

Curious about how the game was developed?

Key Features

- Machine Learning

- Bandwidth Optimization

- Protobuf Implementation

- UDP Sockets

- Server/Client Communication

Server & Client Setup

UDP Setup

The game itself acts as the host, listening for a client on a specified port. Once a client has connected and the game starts, the server begins sending updates about the current board state.

The client then stores the server data to be used in determining the bot’s next actions. After a set interval, the client sends these actions to the server to be interpreted and executed.

For example, the server sends an updated puck position, the client sets the bot’s desired position to that new position, and the client sends it back to the server. Once the server receives it, it begins moving the bot towards the desired position.
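The round trip described above can be sketched with Python's standard `socket` module. This is a minimal loopback illustration, not the SDK's actual code: `choose_action`, `run_client_once`, and `demo` are hypothetical names, the hello datagram is an assumed way for the client to announce itself, and the payload is packed with `struct` as a stand-in for the project's protobuf messages.

```python
import socket
import struct


def choose_action(puck_xy):
    """Hypothetical policy: target the puck's latest reported position."""
    return puck_xy


def run_client_once(sock, server_addr):
    """Receive one state datagram, pick a desired position, and send it back."""
    data, _ = sock.recvfrom(1024)
    puck_xy = struct.unpack("<2f", data)  # two little-endian float32 values
    desired = choose_action(puck_xy)
    sock.sendto(struct.pack("<2f", *desired), server_addr)
    return desired


def demo():
    """Loopback round trip using a stand-in server on an OS-assigned port."""
    server = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    client = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        server.bind(("127.0.0.1", 0))
        server.settimeout(2.0)
        client.settimeout(2.0)
        server_addr = server.getsockname()

        # The client announces itself so the host learns where to send updates.
        client.sendto(b"hello", server_addr)
        _, client_addr = server.recvfrom(1024)

        # The host pushes a puck position; the client answers with its target.
        server.sendto(struct.pack("<2f", 3.0, 4.5), client_addr)
        run_client_once(client, server_addr)

        reply, _ = server.recvfrom(1024)
        return struct.unpack("<2f", reply)
    finally:
        server.close()
        client.close()
```

Calling `demo()` returns the desired position the stand-in server received, which here is simply the puck position echoed back.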

Protocol Buffers

Because data is sent and received as datagrams, and across multiple programming languages (Python and C#), Google’s protobuf is used to serialize it. The client and server both use a CustomVector type, which contains an identifier and a vector of floats, so it can carry variable amounts of data.

This also allows a bot to be written in any of the many different languages that protobuf supports.
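To make the shape of such a message concrete, here is a small Python stand-in for a CustomVector. It deliberately does not use protobuf: the encoding below is a hand-rolled `struct` layout chosen only for illustration, and the integer identifier, the field names, and the `encode`/`decode` helpers are all assumptions rather than the project's real schema.

```python
import struct
from dataclasses import dataclass, field


@dataclass
class CustomVector:
    """Illustrative stand-in: an identifier plus a variable-length float list."""
    identifier: int  # assumed to be an unsigned integer tag
    values: list = field(default_factory=list)

    def encode(self) -> bytes:
        # Header: uint32 identifier + uint16 count, then `count` float32 values.
        count = len(self.values)
        return struct.pack(f"<IH{count}f", self.identifier, count, *self.values)

    @classmethod
    def decode(cls, payload: bytes) -> "CustomVector":
        identifier, count = struct.unpack_from("<IH", payload, 0)
        values = list(struct.unpack_from(f"<{count}f", payload, 6))
        return cls(identifier, values)
```

The same type can then carry a two-float position or a much longer list, with the identifier telling the receiver how to interpret the values.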

Bandwidth Optimization

Necessary Information

When information is updated, its new and old values are compared. If the difference is below a certain threshold, the data is not sent. This also absorbs floating-point error, as long as the threshold is set to a reasonable value.
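A minimal sketch of this kind of threshold check follows. The `DeltaFilter` class, its method names, and the default threshold are hypothetical. It also makes one design choice the text does not specify: new values are compared against the last value actually sent, so that slow drift is eventually transmitted.

```python
class DeltaFilter:
    """Suppress updates whose change since the last sent value is too small."""

    def __init__(self, threshold=0.01):
        self.threshold = threshold
        self.last_sent = {}  # key -> tuple of floats most recently transmitted

    def filter(self, key, value):
        """Return the value to send, or None if the update should be skipped."""
        value = tuple(value)
        previous = self.last_sent.get(key)
        if previous is not None and all(
            abs(new - old) < self.threshold for old, new in zip(previous, value)
        ):
            return None  # every component moved less than the threshold
        self.last_sent[key] = value
        return value
```

For example, with a threshold of 0.1, a puck moving from `(0.0, 0.0)` to `(0.05, 0.0)` is skipped, while a later move to `(0.2, 0.0)` is sent.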

Map Updates

The largest single block of data that needs to be sent is the game map. While it would be possible to send the seed and have the client generate its own copy, any change to how the noise is generated would leave the client’s copy out of sync with the server’s.

Instead, at the start of the game the server sends the grid as a flattened array, in which each index represents a different block and each value is the health of that block. Whenever a block is updated, a vector with its index and new health is sent to the client.
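The flattened-grid scheme can be sketched as follows. Row-major ordering and the function names are assumptions. The index is cast with `int()` because, if it travels in a vector of floats, it would arrive as a float.

```python
def flatten(grid):
    """Flatten a 2D grid of block health values into one list, row by row."""
    return [health for row in grid for health in row]


def index_of(row, col, width):
    """Map a (row, col) cell to its position in the flattened array."""
    return row * width + col


def apply_update(flat, update):
    """Apply a single (index, new_health) update to the client's copy."""
    index, health = update
    flat[int(index)] = health


# Initial sync: the client receives the whole map once.
grid = [[3, 3, 0],
        [1, 2, 3]]
client_map = flatten(grid)

# Later: only the changed block is sent, as (index, new health).
apply_update(client_map, (float(index_of(1, 1, width=3)), 1.0))
```

After the initial sync, each block change costs two numbers on the wire instead of the full array.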

Machine Learning

The bot is given a range of rewards and punishments to encourage certain behaviors. For instance, it has an incentive to keep the puck on the opponent’s side of the map, and is punished when it fails to do so.
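As an illustration of the puck-side incentive, a per-step reward might look like the sketch below. The function name, the goal terms, and every numeric value are hypothetical. The actual reward set and its weights are not given here.

```python
def step_reward(puck_on_opponent_side, scored=False, conceded=False,
                side_bonus=0.01, goal_reward=1.0):
    """Combine a small positional incentive with larger goal events.

    All magnitudes are illustrative assumptions, not the project's values.
    """
    # Small reward while the puck is on the opponent's side, small penalty otherwise.
    reward = side_bonus if puck_on_opponent_side else -side_bonus
    if scored:
        reward += goal_reward
    if conceded:
        reward -= goal_reward
    return reward
```

Keeping the positional term much smaller than the goal term is a common way to nudge behavior without letting the bot prefer holding position over scoring.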

Initially, the bot didn’t have much to go on as it was exploring, testing random inputs. After much trial and error, it tended to move towards the top right position, where its defenses were at their weakest.

Proximal Policy Optimization

The bot itself is a neural network trained with Proximal Policy Optimization (PPO), a policy-gradient reinforcement learning algorithm. Because PPO reuses each batch of collected experience for several gradient updates, it makes good use of a limited number of samples, which allows for more efficient training. With each game match taking about 2 minutes, this efficiency is crucial to training the model quickly.
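At the heart of PPO is a clipped surrogate objective that limits how far a single update can move the policy. The sketch below computes that term for one sample in plain Python. The function name is hypothetical, and `epsilon=0.2` is a commonly used default rather than a value taken from this project.

```python
def clipped_surrogate(ratio, advantage, epsilon=0.2):
    """PPO clipped objective for one sample.

    ratio:     new_policy_prob / old_policy_prob for the action taken
    advantage: estimate of how much better the action was than expected
    """
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    # Taking the minimum keeps the objective pessimistic, which removes the
    # incentive to push the ratio outside [1 - epsilon, 1 + epsilon].
    return min(ratio * advantage, clipped_ratio * advantage)
```

With a positive advantage, a ratio of 1.5 is credited only as if it were 1.2. This bound is what makes it safe to take several gradient steps on the same batch of matches.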

Additionally, the high computational cost of PPO is mitigated by the separation between the game server and the bot client. Because the machine running the model is not also running the game, it has more resources available to train and to react to game data quickly.